Dissecting the Caffe-SSD object detection network from its prototxt file

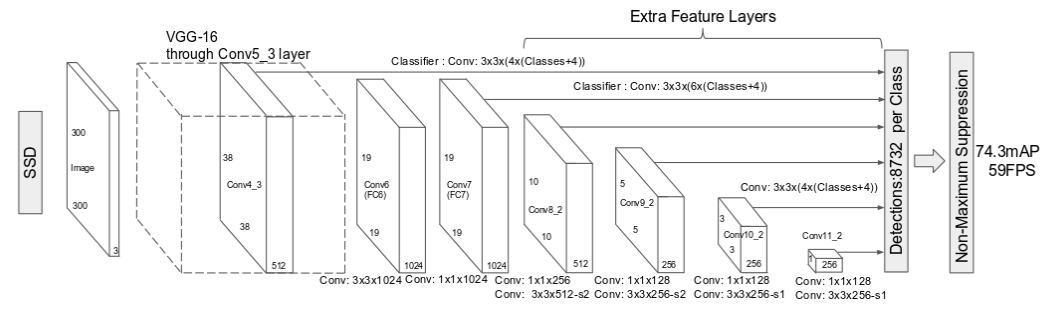

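SSD network structure

The figure above shows the SSD300 network structure from the paper. The base network at the front is part of VGG16, followed by several newly added convolutional layers. The feature maps conv4_3, conv7 (fc7), conv8_2, conv9_2, conv10_2 and conv11_2 are used to generate default boxes (prior boxes) of different sizes and aspect ratios, and softmax classification and location regression are performed on all of these feature maps at once.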
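To make the multi-scale design concrete, here is a minimal sketch that counts the prior boxes SSD300 produces. The feature map sizes and boxes-per-cell values are taken from the SSD paper's SSD300 configuration; the names `FEATURE_MAPS` and `count_prior_boxes` are made up for this illustration.

```python
# (layer name, feature map side length, prior boxes per cell) for SSD300.
# Values follow the SSD paper's SSD300 configuration.
FEATURE_MAPS = [
    ("conv4_3", 38, 4),
    ("fc7", 19, 6),
    ("conv8_2", 10, 6),
    ("conv9_2", 5, 6),
    ("conv10_2", 3, 4),
    ("conv11_2", 1, 4),
]


def count_prior_boxes(feature_maps):
    """Sum side * side * boxes_per_cell over all feature maps."""
    return sum(side * side * per_cell for _, side, per_cell in feature_maps)


if __name__ == "__main__":
    for name, side, per_cell in FEATURE_MAPS:
        print(name, side * side * per_cell)
    print("total", count_prior_boxes(FEATURE_MAPS))  # total 8732
```

conv4_3 alone contributes 38 × 38 × 4 = 5776 of the 8732 boxes, which is why it matters most for small objects.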

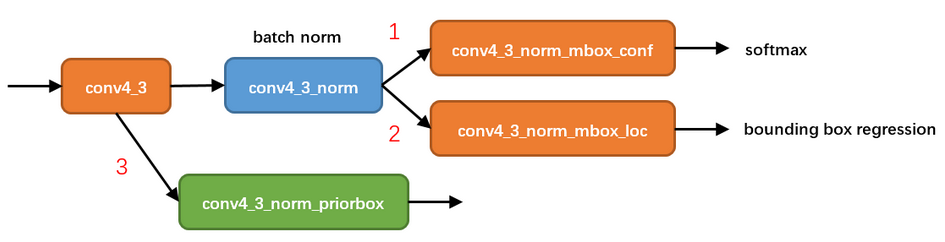

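Generating and using prior boxes

Take the conv4_3 layer as an example. The accompanying diagram shows how conv4_3 generates prior boxes and feeds the classification and regression branches.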
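As a rough illustration of prior box generation for one conv4_3 cell, the sketch below mimics what a PriorBox layer does. The parameter values (`min_size=30`, `max_size=60`, aspect ratio 2 with flipping, `step=8`, `offset=0.5`) are assumptions based on the commonly distributed SSD300 VOC prototxt, and `prior_boxes_for_cell` is a hypothetical helper, not a Caffe API.

```python
import math


def prior_boxes_for_cell(row, col, img_size=300, step=8, offset=0.5,
                         min_size=30.0, max_size=60.0, aspect_ratios=(2.0,)):
    """Return normalized [xmin, ymin, xmax, ymax] prior boxes for one cell.

    All default parameter values are assumptions for conv4_3 in SSD300.
    """
    # Center of the cell in input-image pixels.
    cx = (col + offset) * step
    cy = (row + offset) * step

    # Box 1: square with side min_size.
    sizes = [(min_size, min_size)]
    # Box 2: square with side sqrt(min_size * max_size).
    side = math.sqrt(min_size * max_size)
    sizes.append((side, side))
    # Remaining boxes: each aspect ratio and its reciprocal (flip).
    for ar in aspect_ratios:
        for r in (ar, 1.0 / ar):
            sizes.append((min_size * math.sqrt(r), min_size / math.sqrt(r)))

    return [
        [(cx - w / 2) / img_size, (cy - h / 2) / img_size,
         (cx + w / 2) / img_size, (cy + h / 2) / img_size]
        for w, h in sizes
    ]


if __name__ == "__main__":
    for box in prior_boxes_for_cell(0, 0):
        print([round(v, 4) for v in box])
```

With these assumed settings each cell yields 4 boxes, so the 38 × 38 conv4_3 map yields 5776 prior boxes. Boxes near the border can extend outside [0, 1] because this sketch does no clipping.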

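The layers in the prototxt file that relate to conv4_3 are as follows.

In path (2) of the figure above, the network outputs [dxmin, dymin, dxmax, dymax], which corresponds to bbox in the code below. The location regression relative to the prior box is then carried out as follows.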
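The original code did not survive here, so the following is only a sketch of how such a decoding step typically looks. It assumes the center-size encoding and the variance values (0.1, 0.1, 0.2, 0.2) commonly used with Caffe-SSD; `decode_bbox` is a hypothetical name, and `bbox` stands for the network output [dxmin, dymin, dxmax, dymax].

```python
import math


def decode_bbox(prior, bbox, variance=(0.1, 0.1, 0.2, 0.2)):
    """Apply predicted offsets `bbox` to `prior` (both 4-element lists).

    Assumes center-size encoding: the first two offsets move the center,
    the last two scale width and height. Variance values are assumptions.
    """
    pxmin, pymin, pxmax, pymax = prior
    prior_w = pxmax - pxmin
    prior_h = pymax - pymin
    prior_cx = (pxmin + pxmax) / 2.0
    prior_cy = (pymin + pymax) / 2.0

    dxmin, dymin, dxmax, dymax = bbox
    # Shift the center proportionally to the prior's size.
    cx = variance[0] * dxmin * prior_w + prior_cx
    cy = variance[1] * dymin * prior_h + prior_cy
    # Scale width and height exponentially.
    w = math.exp(variance[2] * dxmax) * prior_w
    h = math.exp(variance[3] * dymax) * prior_h

    # Convert back to corner form.
    return [cx - w / 2.0, cy - h / 2.0, cx + w / 2.0, cy + h / 2.0]


if __name__ == "__main__":
    prior = [0.1, 0.1, 0.3, 0.3]
    print(decode_bbox(prior, [0.0, 0.0, 0.0, 0.0]))  # zero offsets reproduce the prior
    print(decode_bbox(prior, [1.0, 0.0, 0.0, 0.0]))  # center moves right
```

Zero offsets return the prior box itself, which is a convenient sanity check for any decoding routine.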


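Training and loss computation

Matching strategy

During training, ground truth boxes and prior boxes are paired as follows:

1. First, for each ground truth box, find the default box that has the highest IoU with it. This guarantees that every ground truth box is paired with a default box.

2. SSD then tries to pair each remaining unmatched default box with any ground truth box. The pair counts as a match whenever their jaccard overlap exceeds a threshold (0.5 for SSD300).

A default box that is matched to a ground truth (GT) box is a positive; a default box that is not matched to any GT box is a negative.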
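The two matching steps above can be sketched roughly as follows. This is a simplified illustration, not Caffe-SSD's matching code: `iou` and `match_priors` are hypothetical helpers, boxes are [xmin, ymin, xmax, ymax] lists, and if two ground truth boxes pick the same best prior in step 1, the later one simply wins.

```python
def iou(a, b):
    """Jaccard overlap (IoU) of two [xmin, ymin, xmax, ymax] boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_priors(gt_boxes, priors, threshold=0.5):
    """Return a list where entry i is the GT index for prior i, or -1 (negative)."""
    match = [-1] * len(priors)
    if not gt_boxes or not priors:
        return match

    # Step 1: every GT box claims the prior with the highest IoU.
    for g, gt in enumerate(gt_boxes):
        best = max(range(len(priors)), key=lambda p: iou(gt, priors[p]))
        match[best] = g

    # Step 2: each still-unmatched prior takes its best GT if IoU > threshold.
    for p, prior in enumerate(priors):
        if match[p] != -1:
            continue
        overlaps = [iou(gt, prior) for gt in gt_boxes]
        g = max(range(len(gt_boxes)), key=lambda k: overlaps[k])
        if overlaps[g] > threshold:
            match[p] = g
    return match


if __name__ == "__main__":
    gts = [[0.0, 0.0, 0.5, 0.5]]
    priors = [[0.0, 0.0, 0.5, 0.5], [0.0, 0.0, 0.5, 0.4],
              [0.6, 0.6, 0.9, 0.9], [0.3, 0.3, 0.8, 0.8]]
    print(match_priors(gts, priors))  # [0, 0, -1, -1]
```

Priors with index -1 in the result are the negatives; all others are positives assigned to the given ground truth box.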

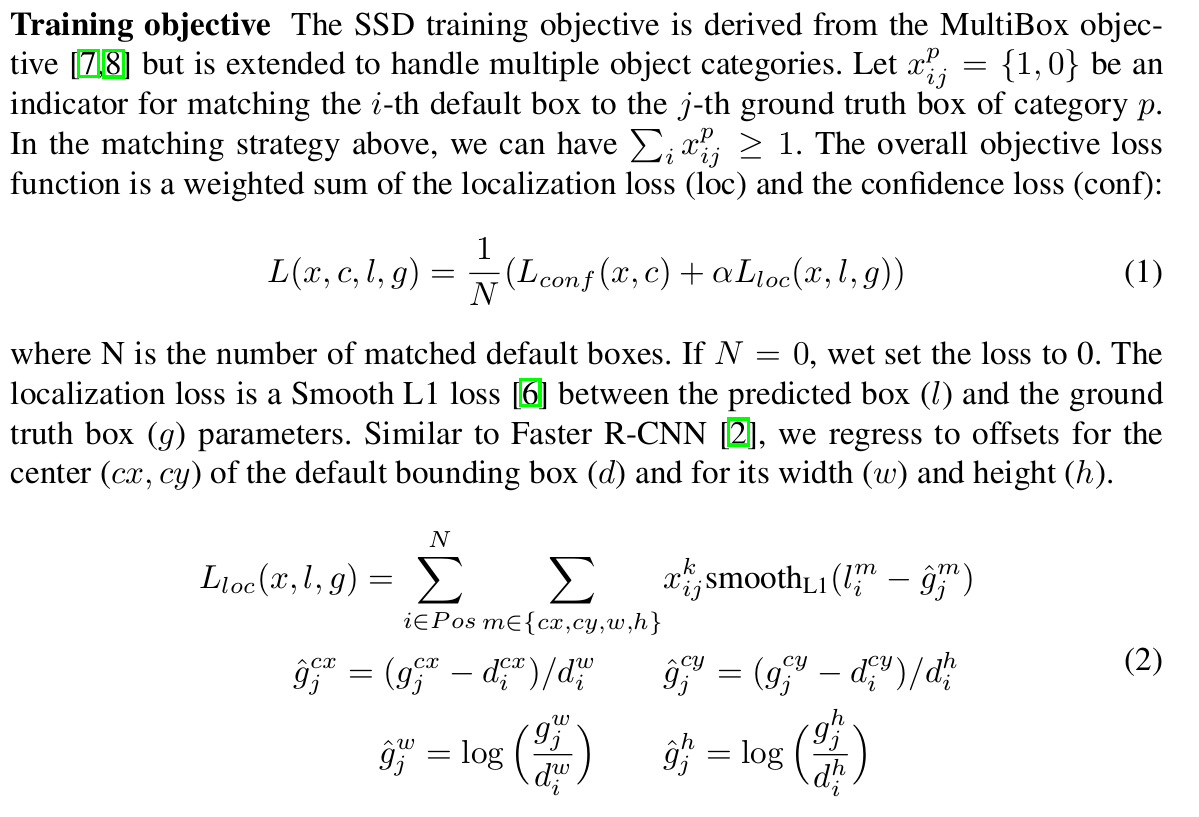

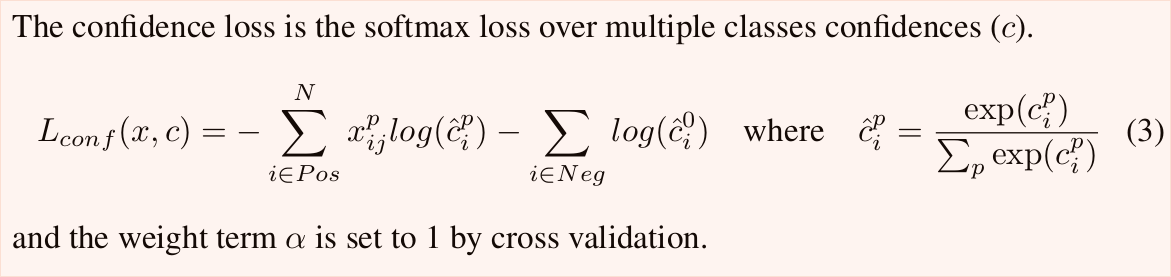

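Loss function

The SSD loss consists of two parts, a confidence loss and a location loss. N is the number of prior boxes matched to GT (ground truth) boxes, and the parameter α adjusts the balance between the confidence loss and the location loss; by default α = 1.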
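A minimal sketch of this combined loss, assuming the formulation in the SSD paper: softmax cross-entropy for confidence, smooth L1 for location, both summed and divided by N, with the loss set to 0 when N = 0. The function names are invented for this example, label 0 is assumed to mean background, and the choice of which negative boxes to include is left to the caller.

```python
import math


def smooth_l1(x):
    """Smooth L1: quadratic near zero, linear elsewhere."""
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5


def softmax_cross_entropy(logits, label):
    """Negative log softmax probability of `label` (numerically stable)."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum - logits[label]


def ssd_loss(conf_logits, labels, loc_preds, loc_targets, alpha=1.0):
    """Return (L_conf + alpha * L_loc) / N for a set of prior boxes.

    labels[i] > 0 marks a positive (matched) box; 0 is assumed background.
    Location loss is accumulated over positive boxes only.
    """
    num_pos = sum(1 for lab in labels if lab > 0)
    if num_pos == 0:
        return 0.0  # the paper sets the loss to 0 when N = 0

    conf = sum(softmax_cross_entropy(s, lab)
               for s, lab in zip(conf_logits, labels))
    loc = sum(smooth_l1(p - t)
              for pred, tgt, lab in zip(loc_preds, loc_targets, labels)
              if lab > 0
              for p, t in zip(pred, tgt))
    return (conf + alpha * loc) / num_pos


if __name__ == "__main__":
    logits = [[0.0, 0.0], [0.0, 0.0]]
    labels = [1, 0]
    preds = [[0.5, 0.0, 0.0, 0.0], [9.0, 9.0, 9.0, 9.0]]
    targets = [[0.0] * 4, [0.0] * 4]
    print(ssd_loss(logits, labels, preds, targets))
```

In the usage example the second box is a negative, so its large location error contributes nothing; only its confidence term enters the sum.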

Implementation


References

[1] CNN目标检测(三):SSD详解 (CNN Object Detection, Part 3: SSD Explained)

[2] SSD paper